Deep Learning Foundations and Concepts

Deep learning is a subset of machine learning that has gained immense popularity in recent years. It involves training deep neural networks to learn and make predictions from large amounts of data. This article will provide an overview of the foundations and concepts behind deep learning, helping you understand its fundamental principles and applications.

Key Takeaways:

- Deep learning is a subset of machine learning that focuses on training deep neural networks.

- Deep neural networks are composed of multiple layers of interconnected nodes or neurons.

- Deep learning has been successfully applied to various domains, including computer vision, natural language processing, and speech recognition.

- The foundations of deep learning include artificial neural networks, backpropagation, and gradient descent.

- Deep learning models require large amounts of training data and computing power.

Deep learning models are based on artificial neural networks (ANNs), which are inspired by the structure and functioning of the human brain. ANNs consist of interconnected nodes or neurons organized into layers. Each neuron receives input from the previous layer, performs calculations, and passes the output to the next layer. The strength of the connections, or weights, between the neurons determines how the information is processed and flows through the network.
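To make the layer-by-layer flow concrete, here is a minimal sketch of a forward pass in plain Python. The network shape, the weight values, the bias terms, and the choice of a sigmoid activation are all illustrative assumptions rather than anything prescribed above.

```python
import math

def dense_forward(inputs, weights, biases):
    """Compute one fully connected layer: sigmoid(w . x + b) for each neuron."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the previous layer's outputs, plus a bias term.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        # The sigmoid activation squashes the sum into the range (0, 1).
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden_w = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_b = [0.0, 0.1, -0.1]
output_w = [[0.7, -0.5, 0.2]]
output_b = [0.05]

x = [1.0, 2.0]
hidden = dense_forward(x, hidden_w, hidden_b)            # output of layer 1
prediction = dense_forward(hidden, output_w, output_b)   # output of layer 2
print(hidden, prediction)
```

Changing any entry in `hidden_w` or `output_w` changes the prediction, which is exactly what training exploits.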

Deep learning models are highly effective in solving complex problems by automatically learning hierarchical representations of data.

Training deep neural networks involves backpropagation, a process by which the network learns from its mistakes. During training, the neural network makes predictions on the input data, and the predicted outputs are compared to the actual outputs. The discrepancy between the predicted and actual outputs is quantified using a loss function. Backpropagation calculates the gradients of the loss function with respect to the network’s weights, and these gradients are used to update the weights through an optimization algorithm, such as gradient descent.

Backpropagation enables deep neural networks to adjust their weights and improve their predictions over time.
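The training loop described above can be sketched for a deliberately tiny network: one input, one tanh hidden neuron, and one linear output, trained with a mean squared error loss. The data points, initial weights, learning rate, and the function names `loss_and_grads` and `train` are assumptions chosen for illustration.

```python
import math

def loss_and_grads(params, xs, ys):
    """Return the mean squared error and its gradient for a 1-1-1 network."""
    w1, b1, w2, b2 = params
    n = len(xs)
    loss = 0.0
    grads = [0.0, 0.0, 0.0, 0.0]
    for x, y in zip(xs, ys):
        # Forward pass: input -> hidden activation -> prediction.
        h = math.tanh(w1 * x + b1)
        y_hat = w2 * h + b2
        loss += (y_hat - y) ** 2 / n
        # Backward pass: apply the chain rule from the loss back to each weight.
        d_yhat = 2.0 * (y_hat - y) / n
        d_h = d_yhat * w2
        d_z = d_h * (1.0 - h * h)      # derivative of tanh is 1 - tanh^2
        grads[0] += d_z * x            # dL/dw1
        grads[1] += d_z                # dL/db1
        grads[2] += d_yhat * h         # dL/dw2
        grads[3] += d_yhat             # dL/db2
    return loss, grads

def train(xs, ys, lr=0.05, steps=500):
    """Plain (full-batch) gradient descent from a fixed starting point."""
    params = [0.1, 0.0, 0.1, 0.0]
    history = []
    for _ in range(steps):
        loss, grads = loss_and_grads(params, xs, ys)
        history.append(loss)
        # Move each parameter a small step against its gradient.
        params = [p - lr * g for p, g in zip(params, grads)]
    return params, history

# Made-up training data with a roughly S-shaped relationship.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [-1.0, -0.8, 0.0, 0.8, 1.0]
params, history = train(xs, ys)
print("first loss:", history[0], "last loss:", history[-1])
```

Real frameworks compute these gradients automatically, but the steps are the same: a forward pass, a loss, a backward pass, and a weight update.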

Deep Learning Applications

Deep learning has been successfully applied to various domains, revolutionizing many industries. Some of the notable applications include:

- Computer vision: Deep learning algorithms have enabled breakthroughs in image classification, object detection, and autonomous driving.

- Natural language processing: Deep learning models have advanced language translation, sentiment analysis, and chatbot technology.

- Speech recognition: Deep learning techniques have significantly improved speech recognition accuracy, enabling voice assistants like Siri and Alexa.

- Medical diagnosis: Deep learning models have been used to detect diseases from medical images and analyze patient data for personalized treatments.

Deep Learning Foundations

Let’s delve deeper into the key concepts that form the foundations of deep learning:

- Artificial Neural Networks (ANNs): ANNs are the building blocks of deep learning models and mimic the interconnected neurons in the human brain.

- Activation Functions: Activation functions introduce non-linearities into the neural network, enabling it to learn complex relationships between inputs and outputs.

- Loss Functions: Loss functions quantify the difference between predicted and actual outputs, guiding the optimization process during training.

Activation functions allow neural networks to capture complex patterns in the data, while loss functions guide the learning process.
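As a rough illustration of how a loss function quantifies the gap between predicted and actual outputs, the sketch below implements two widely used losses in plain Python. The function names and the clipping constant `eps` are assumptions made for this example.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average squared difference; a common choice for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average negative log-likelihood; a common choice for binary classification."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        # Clip the probability so that log(0) never occurs.
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

labels = [1, 0, 1]
good_predictions = [0.9, 0.1, 0.8]
poor_predictions = [0.4, 0.6, 0.3]
print(binary_cross_entropy(labels, good_predictions))
print(binary_cross_entropy(labels, poor_predictions))
```

The poorer predictions receive the larger loss, which is the signal the optimizer uses to adjust the weights.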

Deep Learning Models

Deep learning models are structured architectures composed of multiple layers. The most common types of deep learning models include:

| Model | Description |
|---|---|
| Feedforward Neural Networks | Neurons are organized into sequential layers, with information flowing in one direction, from input to output. |
| Convolutional Neural Networks | Designed for processing grid-like data, such as images, using convolutional and pooling operations. |

Deep learning models, such as convolutional neural networks, have revolutionized image and video processing.
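The convolutional and pooling operations mentioned in the table can be sketched without any libraries. The example below assumes a single-channel image stored as a list of lists, no padding, a stride of 1, and a hand-picked edge-detecting kernel; in a trained CNN the kernel values would be learned.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 5x5 image whose left two columns are dark (0) and the rest bright (1).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# A hand-written kernel that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 1], [-1, 1]]

features = conv2d(image, vertical_edge)  # 4x4 feature map
pooled = max_pool2d(features)            # 2x2 summary
print(features)
print(pooled)
```

The feature map is nonzero only where the edge sits, and pooling keeps that response while shrinking the map.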

Challenges and Future Directions

Despite its remarkable successes, deep learning still faces several challenges. Some of these challenges include:

- Large amounts of training data are required for deep learning models

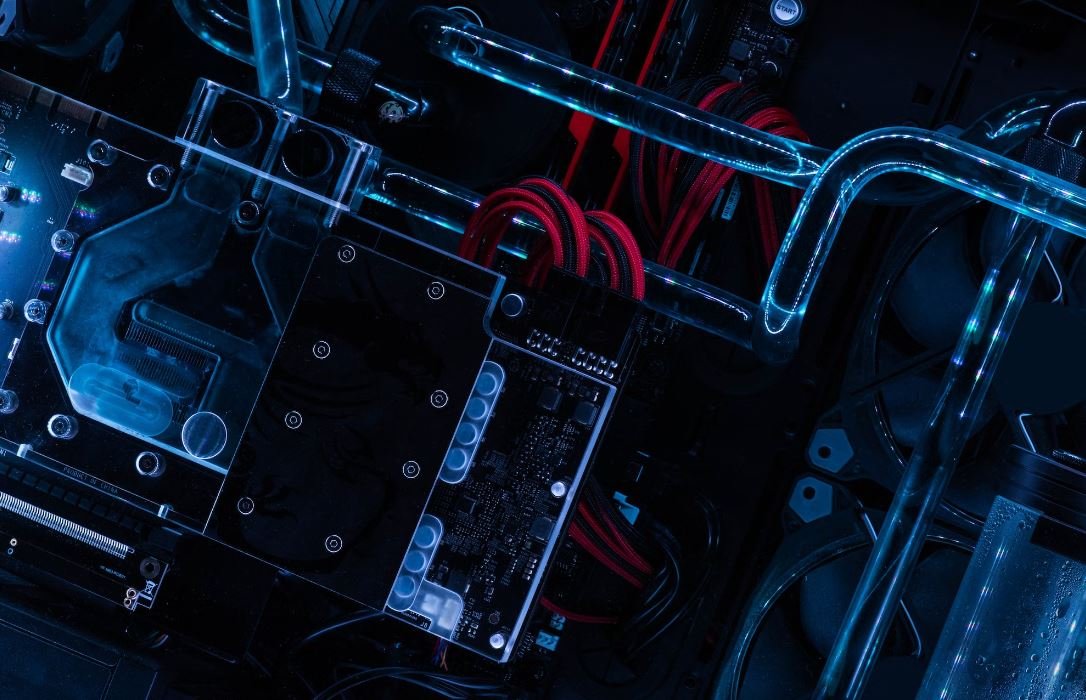

- Deep learning models are computationally intensive and require powerful hardware resources

- Interpretability and explainability of deep learning models is often limited

The future of deep learning lies in developing more efficient algorithms, improving interpretability, and addressing ethical concerns.

Conclusion

Deep learning, a subset of machine learning, utilizes deep neural networks to learn and make predictions from large datasets. Its applications span various fields, including computer vision, natural language processing, and medical diagnosis. The foundations of deep learning, which include artificial neural networks, backpropagation, and optimization algorithms, have paved the way for its success. Despite existing challenges, deep learning continues to evolve and shape the future of AI.

Common Misconceptions

Misconception 1: Deep learning is only useful for complex tasks

One common misconception about deep learning is that it is only useful for solving complex problems or tasks. However, deep learning can actually be applied to a wide range of tasks, including simple ones.

- Deep learning is commonly used for image and speech recognition, which can be seen as complex tasks.

- However, deep learning also finds applications in more straightforward tasks such as text classification or sentiment analysis.

- It can be a valuable tool even when dealing with less complex problems due to its ability to automatically learn features from raw data.

Misconception 2: Deep learning requires vast amounts of data

Another common misconception is that deep learning algorithms require massive amounts of data to be effective. While it is true that deep learning models often benefit from more data, they can still perform well with smaller datasets in certain scenarios.

- While big data is ideal, deep learning can still yield good results with smaller datasets by leveraging transfer learning techniques.

- Pre-trained models can be used to solve similar problems with limited labeled data, reducing the need for a large dataset.

- Furthermore, data augmentation techniques can be employed to artificially increase the size of the training set.
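Data augmentation, the last technique in the list above, can be sketched as follows. The example assumes images are small lists of pixel rows and uses two simple transformations, a random one-pixel shift and a horizontal flip; the function names and the number of copies are illustrative choices.

```python
import random

def horizontal_flip(image):
    """Mirror each row of the image left to right."""
    return [row[::-1] for row in image]

def random_shift(image, max_shift, rng):
    """Shift the image left or right by up to max_shift pixels, padding with zeros."""
    shift = rng.randint(-max_shift, max_shift)
    width = len(image[0])
    shifted = []
    for row in image:
        if shift >= 0:
            shifted.append([0] * shift + row[: width - shift])
        else:
            shifted.append(row[-shift:] + [0] * (-shift))
    return shifted

def augment(dataset, rng, copies=2):
    """Return the original examples plus `copies` transformed variants of each."""
    augmented = []
    for image, label in dataset:
        augmented.append((image, label))
        for _ in range(copies):
            new_image = random_shift(image, 1, rng)
            if rng.random() < 0.5:
                new_image = horizontal_flip(new_image)
            # The label is reused: these transformations are assumed not to change it.
            augmented.append((new_image, label))
    return augmented

rng = random.Random(0)
dataset = [([[1, 2, 3], [4, 5, 6]], "cat"), ([[0, 1, 0], [1, 0, 1]], "dog")]
bigger_dataset = augment(dataset, rng)
print(len(dataset), "->", len(bigger_dataset))
```

Whether a transformation is safe depends on the task. A flipped photo of a cat is still a cat, but a flipped digit or letter may no longer match its label.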

Misconception 3: Deep learning is a black box

Many people believe that deep learning models are completely black boxes, making it impossible to understand how they arrive at their decisions. While it can be challenging to interpret the inner workings of deep learning models, efforts are being made to improve explainability.

- Methods such as activation maximization can be used to visualize what features a deep learning model focuses on.

- Research into interpretability aims to uncover the decision-making processes of deep learning models and make them more transparent.

- Though the problem is not fully solved, strides are being made towards understanding and explaining learned representations.
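The idea behind activation maximization can be shown on a toy scale: start from a blank input and repeatedly nudge it in the direction that increases one neuron's activation. The sketch below assumes a single tanh neuron with hand-picked weights and keeps the input inside the unit ball; applying this to a real network would rely on a framework's automatic differentiation instead of the hand-written gradient used here.

```python
import math

def activation(x, w, b):
    """Activation of one tanh neuron for input x."""
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b)

def maximize_activation(w, b, steps=100, lr=0.5):
    """Gradient ascent on the input, constrained to have length at most 1."""
    x = [0.0] * len(w)
    for _ in range(steps):
        a = activation(x, w, b)
        # d tanh(w . x + b) / dx = (1 - tanh^2) * w
        grad = [(1.0 - a * a) * wi for wi in w]
        x = [xi + lr * gi for xi, gi in zip(x, grad)]
        # Project back onto the unit ball so the input cannot grow without bound.
        norm = math.sqrt(sum(xi * xi for xi in x))
        if norm > 1.0:
            x = [xi / norm for xi in x]
    return x

# Hypothetical neuron weights.
w = [2.0, -1.0, 0.5]
b = 0.0
x_star = maximize_activation(w, b)
print(x_star, activation(x_star, w, b))
```

The resulting input points in the same direction as the weight vector, which shows the pattern this neuron responds to most strongly.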

Misconception 4: Deep learning replaces human intelligence

There is a common misconception that deep learning aims to replicate human intelligence and ultimately replace human involvement in various tasks. However, this is not the case, as deep learning is primarily a tool that complements human intelligence.

- Deep learning algorithms are designed to automate certain tasks, but they still require human guidance in model design, problem formulation, and data preprocessing.

- Human judgment and expertise are crucial for training models, evaluating their results, and making decisions based on their outputs.

- Deep learning can be seen as a powerful tool to support human intelligence and improve decision-making processes.

Misconception 5: Deep learning is a magical solution to all problems

Deep learning has gained significant attention and has achieved remarkable successes in various domains. However, it is important to understand that deep learning is not a universal solution applicable to every problem.

- While deep learning can excel in complex pattern recognition, it may not be the best choice for problems with limited amounts of data or problems that require reasoning and logic.

- Understanding the limitations of deep learning and selecting appropriate models and algorithms for each problem is crucial.

- Other machine learning techniques, such as decision trees or linear regression, may be more suitable depending on the nature of the problem.

Foundations of Deep Learning

Deep learning refers to a subset of machine learning algorithms based on artificial neural networks with multiple layers. These neural networks are loosely inspired by the functioning of the human brain, and they learn to identify patterns and make predictions from data. The following tables provide insights into the foundations and concepts involved in deep learning.

1. Activation Functions in Neural Networks

An activation function determines the output of a neuron given the weighted sum of its inputs. It allows artificial neurons to provide nonlinear responses, enabling the network to learn more complex relationships. Some commonly used activation functions are:

| Activation Function | Formula | Use Case |
|---|---|---|
| Sigmoid | 1 / (1 + e^(-x)) | Binary classification, output probability |
| ReLU (Rectified Linear Unit) | max(0, x) | Hidden layers, faster convergence |
| Tanh | (e^x - e^(-x)) / (e^x + e^(-x)) | Hidden layers, zero-centered outputs in (-1, 1) |
| Softmax | e^(x_i) / ∑_j e^(x_j) | Multiclass classification, output probabilities |
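The formulas in the table translate almost directly into code. The following sketch uses only Python's standard library; the numerically stable forms of sigmoid and softmax are an implementation choice added here, not part of the formulas themselves.

```python
import math

def sigmoid(x):
    """1 / (1 + e^(-x)), written so that large negative x does not overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def relu(x):
    """max(0, x)"""
    return max(0.0, x)

def tanh(x):
    """(e^x - e^(-x)) / (e^x + e^(-x))"""
    return math.tanh(x)

def softmax(xs):
    """e^(x_i) / sum_j e^(x_j); subtracting the maximum avoids overflow."""
    largest = max(xs)
    exps = [math.exp(x - largest) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

for value in (-2.0, 0.0, 2.0):
    print(value, sigmoid(value), relu(value), tanh(value))
print(softmax([1.0, 2.0, 3.0]))
```

Sigmoid, ReLU, and tanh act on one number at a time, while softmax takes a whole vector of scores and returns values that sum to 1.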

2. Common Deep Learning Architectures

Deep learning relies on various architectures that determine the arrangement and connectivity of neural network layers. Here are some widely used architectures:

| Architecture | Description |
|---|---|
| Feedforward Neural Network (FNN) | Traditional neural network where information flows in one direction from input to output. |
| Convolutional Neural Network (CNN) | Especially effective for image and video analysis, CNN applies filters to detect localized patterns and extracts features through convolution and pooling layers. |
| Recurrent Neural Network (RNN) | Suitable for sequential data, RNN utilizes hidden states to capture temporal dependencies between inputs and produces output taking into account previous contexts. |
| Generative Adversarial Network (GAN) | Composed of a generator and a discriminator, GAN generates synthetic data from random noise, aiming to deceive the discriminator and achieve high-quality generated outputs. |
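To illustrate how the hidden state of an RNN carries context from one time step to the next, here is a minimal sketch of a basic recurrent cell. The update rule (tanh of a weighted sum of the current input and the previous hidden state), the weight values, and the function names are assumptions for illustration.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One time step: h_t = tanh(W_xh x_t + W_hh h_(t-1) + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        z = b_h[i]
        z += sum(w_xh[i][j] * x[j] for j in range(len(x)))
        z += sum(w_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(z))
    return h_new

def run_rnn(sequence, w_xh, w_hh, b_h):
    """Process a sequence, starting from an all-zero hidden state."""
    h = [0.0] * len(b_h)
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states

# Hypothetical weights: 1 input feature, 2 hidden units.
w_xh = [[0.5], [-0.3]]
w_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

with_history = run_rnn([[1.0], [0.0]], w_xh, w_hh, b_h)
without_history = run_rnn([[0.0], [0.0]], w_xh, w_hh, b_h)
print(with_history[-1], without_history[-1])
```

Both sequences end with the same input, yet the final hidden states differ because the first sequence saw a nonzero value earlier.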

3. Key Deep Learning Algorithms

Deep learning algorithms drive the learning process within neural networks. Here are some fundamental training algorithms, together with two recurrent model families that rely on them:

| Algorithm | Description |
|---|---|
| Backpropagation | Calculates the gradient of the loss with respect to each weight in the network by applying the chain rule layer by layer, allowing for iterative optimization of the weights. |
| Stochastic Gradient Descent (SGD) | Iteratively updates network weights using gradients computed on a random sample (minibatch) of the training data, which is typically cheaper per update than full-batch gradient descent. |
| Recurrent Neural Networks (RNN) | Use feedback connections so that the hidden state from earlier time steps feeds into later ones, enabling modeling of sequential and time-dependent data. |
| Long Short-Term Memory (LSTM) | A type of RNN that uses gates to selectively remember and forget information over time, mitigating the vanishing gradient problem and improving long-range predictions. |
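The minibatch idea behind stochastic gradient descent can be sketched on a problem small enough to check by eye: fitting a straight line. The synthetic data (generated from y = 3x + 1), the learning rate, the batch size, and the function name are all assumptions made for this example.

```python
import random

def sgd_linear_regression(xs, ys, lr=0.05, epochs=200, batch_size=4, seed=0):
    """Fit y = w * x + b by minibatch SGD on the mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        # Visit the data in a new random order each epoch.
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = 0.0
            grad_b = 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2.0 * error * xs[i] / len(batch)
                grad_b += 2.0 * error / len(batch)
            # Update using the gradient estimated from this minibatch only.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

xs = [i / 10 for i in range(-10, 11)]   # 21 points in [-1, 1]
ys = [3.0 * x + 1.0 for x in xs]        # noise-free targets
w, b = sgd_linear_regression(xs, ys)
print(w, b)
```

Each update looks at only four examples (the final batch of each epoch holds one), yet the estimates still approach the true slope and intercept.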

4. Deep Learning Frameworks

Deep learning frameworks provide libraries and tools to simplify the development and execution of deep learning models. Here are some widely used frameworks:

| Framework | Description |
|---|---|
| TensorFlow | Google’s open-source platform offering comprehensive support for deep learning and ML applications. |
| PyTorch | Originally developed by Facebook’s AI Research lab (FAIR, now part of Meta), PyTorch is popular due to its dynamic computational graph and ease of use. |
| Keras | A high-level neural networks API, most commonly run on top of TensorFlow (recent versions also support other backends), Keras offers a simplified interface for building and training models. |
| Caffe | Efficient and modular, Caffe is particularly suited for convolutional neural networks and computer vision tasks. |

5. Popular Deep Learning Applications

Deep learning finds application in various domains due to its ability to extract meaningful information from complex data. Here are some popular applications:

| Application | Description |
|---|---|
| Image Classification | Deep learning enables accurate categorization of images, aiding tasks such as object recognition and autonomous driving systems. |
| Natural Language Processing (NLP) | NLP algorithms leverage deep learning to process, understand, and generate human language to improve speech recognition, machine translation, chatbots, and sentiment analysis. |
| Recommender Systems | By analyzing user preferences, deep learning models can suggest personalized recommendations for movies, products, or articles. |
| Medical Diagnosis | Deep learning assists in diagnosing diseases by analyzing medical images, such as X-rays and MRIs, allowing for early detection. |

6. Ethical Considerations in Deep Learning

Deep learning raises ethical concerns regarding data privacy, biases, and accountability. It is crucial to address these issues for responsible development and deployment of deep learning models.

7. Deep Learning Advantages

Deep learning offers several advantages over traditional machine learning, including:

| Advantage | Description |
|---|---|
| Automatic Feature Extraction | Deep learning models learn to extract relevant features, diminishing the need for manual feature engineering. |
| Scalability | Deep learning scales well with larger datasets, enabling more accurate predictions as the available data increases. |
| Complex Relationship Modeling | Deep learning can capture intricate patterns and dependencies within data that may be difficult for other algorithms to discern. |

8. Deep Learning Challenges

While deep learning is powerful, it also faces various challenges:

| Challenge | Description |
|---|---|
| High Computational Demands | Training deep learning models can be computationally intensive, requiring dedicated hardware resources such as GPUs. |
| Need for Large Amounts of Labeled Data | Deep learning generally requires substantial labeled data for effective training, which may not always be readily available. |
| Interpretability and Explainability | Deep learning models can be perceived as black boxes, making it challenging to interpret their decision-making processes and explain results. |

9. Future of Deep Learning

The future of deep learning holds immense possibilities, driving advancements in various fields:

| Field | Potential Applications |
|---|---|
| Healthcare | Improving disease diagnosis, predicting patient outcomes, personalizing treatment plans. |
| Autonomous Vehicles | Enhancing object detection and recognition, optimizing navigation systems, improving road safety. |
| Finance | Enhancing fraud detection, predicting market trends, optimizing trading strategies. |
| Robotics | Enabling more intuitive human-robot interactions, enhancing autonomous decision-making capabilities. |

10. Conclusion

Deep learning has revolutionized artificial intelligence by allowing computers to learn from large amounts of data and make complex predictions. With its powerful algorithms, architectures, and frameworks, deep learning finds application in various domains. However, ethical considerations, challenges, and the need for large labeled datasets remain important aspects for further research and development in this field.

Frequently Asked Questions


What is deep learning?

Deep learning is a subfield of machine learning that involves training neural networks with multiple layers to learn and find patterns in large datasets. It relies on artificial neural networks, inspired by the structure and function of the human brain, to process and understand complex data.

What are the key concepts in deep learning?

The key concepts in deep learning include artificial neural networks, activation functions, backpropagation, convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs). These concepts form the basis for building and training deep learning models.

How do artificial neural networks work?

Artificial neural networks are composed of interconnected nodes called neurons. Each neuron takes inputs, applies weights to them, sums them up, and passes the result through an activation function to produce an output. This process is repeated across multiple layers, allowing the network to learn complex relationships between inputs and outputs.

What is backpropagation?

Backpropagation is a key algorithm used to train deep learning models. It involves calculating the gradient of the model’s loss function with respect to the weights and biases in the network. This gradient is then used to update the weights and biases iteratively, allowing the model to minimize the loss and improve its predictions.

How do convolutional neural networks (CNNs) work?

Convolutional neural networks (CNNs) are a specialized type of artificial neural network designed for image analysis and recognition tasks. CNNs apply convolutional filters to input images, allowing them to learn spatial hierarchies and extract meaningful features automatically. These networks are widely used in computer vision applications.

What are recurrent neural networks (RNNs) used for?

Recurrent neural networks (RNNs) are used for handling sequential and time-series data, where the current data point depends on the previous ones. RNNs have feedback connections that allow information to loop back, making them effective for tasks such as natural language processing, speech recognition, and sentiment analysis.

What are generative adversarial networks (GANs)?

Generative adversarial networks (GANs) consist of two neural networks: a generator and a discriminator. The generator generates synthetic data samples, while the discriminator tries to distinguish between real and fake samples. The networks are trained together, with the generator learning to produce increasingly realistic samples, and the discriminator getting better at identifying fakes.
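The opposing goals of the two networks show up clearly in their loss functions. The sketch below computes both losses from the discriminator's outputs alone, assuming those outputs are probabilities that a sample is real. It uses binary cross-entropy and the commonly used "non-saturating" generator loss, which is one standard formulation among several; the networks themselves are left out.

```python
import math

def bce(prob, target, eps=1e-12):
    """Binary cross-entropy for one probability and one 0/1 target."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1 - prob))

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Low when the discriminator scores generated samples near 1 (it is fooled)."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs early and late in training.
early_real, early_fake = [0.9, 0.8], [0.1, 0.2]   # fakes are easy to spot
late_real, late_fake = [0.6, 0.5], [0.5, 0.4]     # fakes are more convincing

print(discriminator_loss(early_real, early_fake), generator_loss(early_fake))
print(discriminator_loss(late_real, late_fake), generator_loss(late_fake))
```

As the generated samples become more convincing, the generator's loss falls and the discriminator's loss rises, which reflects the tug-of-war described above.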

How is deep learning applied in real-world applications?

Deep learning is applied in various real-world applications, including image and speech recognition, natural language processing, recommendation systems, autonomous vehicles, healthcare diagnostics, and fraud detection. Its ability to learn complex patterns from large datasets makes it a powerful tool in many domains.

What are the challenges of deep learning?

Some challenges of deep learning include the need for large labeled datasets, high computational requirements, potential overfitting of the model to the training data, interpretability of the learned representations, and robustness to adversarial attacks. Researchers are actively working on addressing these challenges to further improve deep learning techniques.

Where can I learn more about deep learning foundations and concepts?

There are various online resources available to learn more about deep learning. You can find tutorials, courses, books, and research papers on platforms like Coursera, Udacity, edX, and arXiv. Additionally, many universities offer specialized deep learning programs and workshops.