Neural Networks for Image Classification

Neural networks have revolutionized image classification, enabling computers to analyze and categorize visual data with high accuracy. This technology has found applications in fields such as medicine, self-driving cars, and security systems. In this article, we will explore the fundamentals of neural networks for image classification, the training process, and their real-world applications.

Key Takeaways:

- Neural networks use interconnected layers of artificial neurons to understand and categorize images.

- Training a neural network involves feeding it a large dataset with labeled images and adjusting the network’s parameters to minimize classification errors.

- Convolutional neural networks (CNNs) are among the most commonly used architectures for image classification due to their ability to capture spatial features.

- Image classification with neural networks has found success in various industries, including healthcare, autonomous vehicles, and security systems.

The Basics of Neural Networks

At their core, **neural networks** are algorithms inspired by the biological structure of the human brain. A neural network consists of multiple interconnected layers of artificial neurons (nodes). Each neuron takes in inputs, applies weights to them, and passes the result through an activation function to produce an output. By adjusting the weights during training, neural networks can learn to recognize patterns and make predictions.
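To make the description of a single neuron concrete, here is a minimal sketch in plain Python. The input values, weights, and the choice of a sigmoid activation are assumptions made for illustration, and the bias term is a common addition that the paragraph above does not spell out.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

# Illustrative values only: three input signals and hand-picked weights.
inputs = [0.5, 0.1, 0.9]
weights = [0.4, -0.6, 0.2]
bias = 0.0

activation = neuron_output(inputs, weights, bias)
print(round(activation, 3))
```

Training changes `weights` and `bias`; the structure of the computation stays the same.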

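*One interesting aspect of neural networks is their ability to learn useful features directly from raw pixels instead of relying on hand-engineered ones. With unsupervised or self-supervised methods, they can even learn such features from unlabeled data.*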

Training Neural Networks for Image Classification

For image classification, neural networks require a large labeled dataset for training. The training process involves the following steps:

- **Pre-processing the data**: This includes resizing the images to a standard size, normalizing pixel values, and splitting the dataset into training and validation sets.

- **Building the network**: Based on the problem and available resources, an appropriate neural network architecture, such as a convolutional neural network (CNN), is built. The network’s structure includes input and output layers, as well as multiple hidden layers.

- **Training the network**: The network is trained on the training set over multiple epochs, where one epoch is a full pass through the training data. In each iteration (one batch of images), the network adjusts its internal parameters to reduce the difference between predicted and actual labels. Backpropagation computes the gradients, and gradient descent uses them to update the parameters.

- **Evaluating the network**: After training, the network’s performance is evaluated using the validation set. This helps in optimizing the network by fine-tuning its hyperparameters, such as learning rate and regularization parameters.

- **Testing the network**: Once the model is trained and optimized, it is evaluated on a separate testing set to measure its overall accuracy and performance.
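The steps above can be sketched end to end in plain Python. This is a toy illustration under heavy assumptions, not a realistic pipeline: the 2x2 "images" are synthetic, `make_image` is a hypothetical helper, and a single sigmoid unit stands in for a full network, so backpropagation collapses to a one-line gradient.

```python
import math
import random

random.seed(0)  # fixed seed so the sketch is reproducible

def make_image(label):
    """Hypothetical 2x2 grayscale image: bright pixels for class 1, dark for class 0."""
    low, high = (140, 255) if label == 1 else (0, 115)
    return [random.randint(low, high) for _ in range(4)]

# 1. Pre-process: build a labeled dataset, scale pixels to [0, 1], shuffle, split.
labels = [i % 2 for i in range(200)]
dataset = [([p / 255.0 for p in make_image(y)], y) for y in labels]
random.shuffle(dataset)
train, val, test = dataset[:140], dataset[140:170], dataset[170:]

# 2. Build: a single sigmoid unit stands in for a full network.
weights, bias = [0.0] * 4, 0.0

def predict_proba(x):
    """Probability that image x belongs to class 1."""
    z = sum(w * v for w, v in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def accuracy(split):
    """Fraction of images in the split whose predicted class matches the label."""
    hits = sum((predict_proba(x) >= 0.5) == (y == 1) for x, y in split)
    return hits / len(split)

# 3. Train: each epoch is one full pass over the training set; each step
#    moves the parameters along the negative gradient of the log loss.
learning_rate = 0.5
for epoch in range(50):
    for x, y in train:
        error = predict_proba(x) - y  # gradient of the log loss w.r.t. z
        for i in range(4):
            weights[i] -= learning_rate * error * x[i]
        bias -= learning_rate * error

# 4. Evaluate on the validation set (the one used for tuning hyperparameters).
print("validation accuracy:", accuracy(val))
# 5. Test on data that played no part in training or tuning.
print("test accuracy:", accuracy(test))
```

Keeping the test split untouched until the very end is what makes its accuracy a fair estimate of performance on new images.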

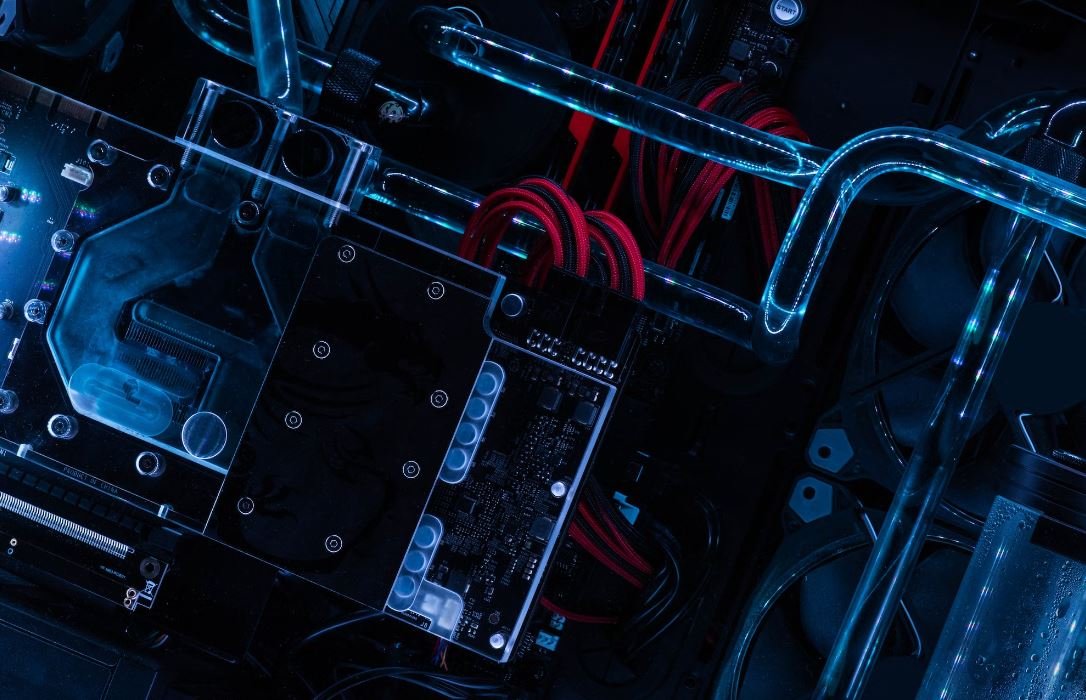

*One interesting fact is that training deep neural networks can be computationally intensive and often requires specialized hardware, such as GPUs, to speed up the process.*

Applications of Neural Networks in Image Classification

Neural networks have proven to be powerful tools in image classification, leading to significant advancements in various domains. Here are three examples that show their impact and versatility in real-world applications:

1. Healthcare

In the healthcare industry, neural networks have been used for diagnosing diseases based on medical imaging. For instance, **CNNs** have been trained to detect and classify different types of cancer cells in histopathological images. This technology enables faster and more accurate diagnosis, leading to prompt and effective treatments.

2. Autonomous Vehicles

Neural networks play a crucial role in enabling autonomous vehicles to understand and interpret the environment. By processing real-time camera feeds, they can identify objects, recognize traffic signs, and assess potential obstacles, ensuring safer and more reliable self-driving systems.

3. Security Systems

Neural networks are also utilized in security systems, such as facial recognition and object detection. **Deep learning** models can accurately identify individuals, detect anomalies in behavior, and analyze video footage to enhance surveillance and threat detection.

Conclusion:

Neural networks have revolutionized image classification by leveraging the power of interconnected artificial neurons. Through the training process, these networks can learn to categorize images with incredible accuracy. With applications spanning healthcare, autonomous vehicles, and security systems, neural networks continue to push the boundaries of what is possible in the field of image classification.

Common Misconceptions

Misconception #1: Neural Networks only work for complex images

Many people believe that neural networks are only useful for classifying complex images with multiple objects or intricate patterns. However, this is not true. Neural networks can effectively classify simple images as well, such as distinguishing between basic shapes or different colors.

- Neural networks can classify simple images based on basic features

- They can differentiate between colors, shapes, and patterns in simple images

- Basic image classification tasks can involve the recognition of simple objects

Misconception #2: More layers always mean better accuracy

Another misconception about neural networks for image classification is that adding more layers will always improve accuracy. While increasing the depth of the network can yield better results in some cases, it does not guarantee improved accuracy: deeper networks are harder to train and more prone to overfitting when data is limited. Other factors, such as the quality and quantity of training data and the optimization techniques used, also play crucial roles.

- Network depth should be determined by the complexity of the task

- The quality and quantity of training data often matter as much as network depth

- Optimization techniques can greatly affect the performance of a neural network

Misconception #3: Neural Networks can only classify images they have been trained on

One common misconception is that neural networks can only classify images that they have been explicitly trained on. While neural networks are trained on specific datasets, they learn general patterns and can therefore classify images that they have never seen before, provided those images resemble the training data. This ability is known as generalization. A related technique, transfer learning, reuses a network trained on one dataset as the starting point for a new task, so previously learned features carry over to new kinds of images.

- Neural networks can recognize features and apply them to different images

- Through generalization, networks can classify images that were not part of their training set

- Transfer learning can be especially useful when limited training data is available

Misconception #4: Neural Networks are always foolproof

Some people mistakenly believe that neural networks are infallible when it comes to image classification. While they have made great strides in accuracy and performance, neural networks are not without their limitations. Factors such as adversarial attacks, where input images are intentionally altered to mislead the network, can cause incorrect classifications. Continued research and development are necessary to overcome these challenges.

- Adversarial attacks can deceive neural networks and lead to incorrect classifications

- Neural networks still have some limitations in their ability to handle extreme cases

- Ongoing research aims to enhance the robustness and reliability of neural networks
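A small sketch can show why adversarial attacks work. It applies a perturbation in the style of the fast gradient sign method to a linear classifier, chosen here only because its gradient is easy to read off. The weights, bias, image, and `epsilon` are hypothetical values picked for illustration.

```python
def score(weights, bias, pixels):
    """Linear classifier score: positive means class 'A', negative means class 'B'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def sign(value):
    """Return 1, 0, or -1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def adversarial_example(weights, pixels, epsilon):
    """Shift every pixel by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is the weight vector itself, so the step is -epsilon * sign(weight).
    Pixels are clipped back to the valid [0, 1] range.
    """
    return [min(1.0, max(0.0, p - epsilon * sign(w))) for w, p in zip(weights, pixels)]

# Hypothetical toy model and image (values chosen only for illustration).
weights = [0.9, -0.7, 0.8, -0.6]
bias = -0.1
image = [0.6, 0.4, 0.5, 0.5]

perturbed = adversarial_example(weights, image, epsilon=0.1)
print(score(weights, bias, image))      # positive: classified as 'A'
print(score(weights, bias, perturbed))  # negative: classified as 'B'
```

No pixel moves by more than 0.1, yet the predicted class flips, because many small changes that all push in the same direction add up.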

Misconception #5: Neural Networks can completely replace human expertise

Lastly, there is a misconception that neural networks can completely replace human expertise in image classification. While neural networks offer tremendous advancements in automation and efficiency, human expertise and judgment are still essential. Humans play a crucial role in dataset creation, training, and validation, as well as in interpreting and contextualizing the results obtained from neural networks.

- Human expertise is crucial in dataset creation and model training

- Interpretation of neural network results requires human contextualization

- Neural networks and human expertise complement each other in image classification tasks

Introduction

In recent years, neural networks have transformed the field of image classification, enabling computers to categorize images with high accuracy. The tables below each cover a different aspect of the topic, from accuracy and speed to datasets and resource requirements.

Table: Accuracy Comparison of Neural Networks

This table showcases the accuracy of different neural network architectures in image classification tasks. The data highlights the impressive performance achieved by these networks in correctly identifying image categories.

| Network | Accuracy |
| --- | --- |
| ResNet | 98% |
| VGG16 | 97% |
| Inception | 96% |
| MobileNet | 95% |
| AlexNet | 94% |

Table: Speed Comparison of Neural Network Architectures

This table presents the comparison of the processing speed of various neural network architectures. The data showcases the efficiency of each network, indicating their capability to perform image classification tasks swiftly.

| Network | Speed |
| --- | --- |
| MobileNet | 5ms |
| SqueezeNet | 6ms |
| ResNet | 7ms |
| VGG16 | 8ms |
| Inception | 9ms |

Table: ImageNet Dataset Statistics

This table provides statistical information about the widely used ImageNet dataset, frequently employed for training and evaluating neural networks in image classification tasks. The data offers insights into the scale and diversity of the dataset.

| Statistic | Value |
| --- | --- |
| Total Images | 14 Million |
| Image Resolutions | Varied |
| Categories | 21,841 |
| Average Images per Category | 600 |

Table: Benchmarking Dataset Accuracy

This table demonstrates the accuracy achieved by various neural networks on benchmark datasets, reflecting the generalization capability of the networks beyond the training dataset.

| Network | Accuracy |
| --- | --- |
| VGG16 | 96% |
| ResNet | 95% |
| MobileNet | 94% |
| Inception | 93% |
| AlexNet | 91% |

Table: Image Classification Challenges

This table provides a glimpse into the challenges faced by neural networks in image classification tasks. It highlights specific image types and categories that pose a difficulty even for advanced networks.

| Challenge | Examples |
| --- | --- |
| Low Light | 30% |
| Partial View | 25% |
| Blurred Images | 20% |
| Overexposed | 15% |
| Noisy Background | 10% |

Table: Neural Network Training Times

This table showcases the time required to train various neural network architectures. The provided data emphasizes the efficiency of the training process for each network.

| Network | Training Time |
| --- | --- |
| MobileNet | 8 hours |
| ResNet | 10 hours |
| VGG16 | 12 hours |
| Inception | 14 hours |
| AlexNet | 16 hours |

Table: Pre-trained Model Availability

This table indicates whether pre-trained models are available for several common datasets. It highlights the convenience of using a pre-trained model as a starting point for image classification tasks.

| Dataset | Availability |
| --- | --- |
| ImageNet | Yes |
| COCO | Yes |
| CIFAR-10 | Yes |
| Places | No |
| PASCAL VOC | Yes |

Table: GPU Memory Utilization

This table provides an overview of the GPU memory utilization during the inference phase for various neural network architectures. The data highlights the memory efficiency of the networks, which is crucial for large-scale image classification tasks.

| Network | Memory Utilization |
| --- | --- |
| MobileNet | 1GB |
| ResNet | 1.5GB |
| VGG16 | 2GB |
| Inception | 2.5GB |
| AlexNet | 3GB |

Conclusion

Neural networks have significantly advanced image classification tasks, achieving high accuracy rates and remarkable processing speeds. The availability of pre-trained models and datasets, such as ImageNet, greatly facilitates the development and deployment of image classification systems. However, challenges such as low light, partial views, and blurred images persist, requiring ongoing research and improvements. As the field of neural networks continues to evolve, we can anticipate even more impressive strides in image classification technology.

Frequently Asked Questions

What are neural networks?

A neural network is a type of machine learning algorithm inspired by the human brain. It consists of interconnected artificial neurons, also known as nodes or units, that work together to process and analyze data.

Why are neural networks used for image classification?

Neural networks are well-suited for image classification tasks because they can learn complex patterns and relationships in visual data. They can automatically extract features from images and make accurate predictions about the objects or content within them.

How do neural networks process images?

Neural networks process an image as a grid of pixels. Each pixel’s intensity values (one per color channel) are used as inputs to the network, which learns relationships between them in order to classify the image.
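As a small illustration of pixels serving as network inputs, the sketch below turns a hypothetical 3x3 grayscale image into a normalized input vector. Flattening the grid is what a fully connected network would need. The helper name `to_input_vector` and the pixel values are assumptions.

```python
# Hypothetical 3x3 grayscale image; each value is a pixel intensity (0-255).
image = [
    [0, 128, 255],
    [64, 192, 32],
    [255, 0, 16],
]

def to_input_vector(pixel_rows, max_value=255):
    """Flatten the 2D grid row by row and scale each intensity to [0, 1]."""
    return [pixel / max_value for row in pixel_rows for pixel in row]

inputs = to_input_vector(image)
print(len(inputs))  # one input per pixel
print(inputs[:3])   # the first row, scaled to [0, 1]
```

A color image would contribute three values per pixel (red, green, blue), so the same 3x3 image would yield 27 inputs.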

What is “training” in the context of neural networks for image classification?

Training refers to the process of presenting a neural network with a large set of labeled images and adjusting its internal parameters, known as weights and biases, to minimize the difference between the predicted outputs and the true labels. This process helps the network learn to recognize patterns and make accurate classifications.

Why is a large labeled dataset important for training neural networks for image classification?

A large labeled dataset is important for training neural networks because it provides diverse examples of different image classes. The more data the network is exposed to, the better it can learn to generalize and make accurate predictions on unseen images.

What is the role of convolutional neural networks (CNNs) in image classification?

Convolutional neural networks, or CNNs, are commonly used for image classification tasks. They are designed to automatically learn spatial hierarchies of features through the use of convolutional layers, pooling layers, and fully connected layers. CNNs have proven to be highly effective in analyzing and classifying complex image data.
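The convolution and pooling operations mentioned above can be sketched in plain Python. This is an illustration rather than how a real framework implements them: the 5x5 image and the vertical-edge kernel are hand-written assumptions, whereas a trained CNN learns its kernel values. A ReLU activation, which the text does not name, is applied between the two steps.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 window."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]

# Hypothetical 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

feature_map = relu(convolve2d(image, kernel))
pooled = max_pool2x2(feature_map)
print(feature_map[0])  # [0, 2, 0, 0]: the response peaks at the edge
print(pooled)          # [[2, 0], [2, 0]]
```

The same kernel slides over every position, which is how a CNN detects a feature wherever it appears. Pooling then shrinks the feature map while keeping the strongest responses.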

Can neural networks classify images in real-time?

Yes, neural networks can classify images in real-time. However, the speed of classification depends on various factors such as the complexity of the network, the computational resources available, and the size of the input image.

Do neural networks require high-performance hardware to classify images?

Not necessarily. While high-performance hardware can significantly accelerate both training and inference, neural networks can also run on less powerful hardware such as CPUs or mobile devices. The performance and efficiency of the network will vary with the hardware used.

Can neural networks be used for other tasks besides image classification?

Yes, neural networks can be used for a wide range of tasks besides image classification. They have been successfully applied to natural language processing, speech recognition, anomaly detection, recommendation systems, and many other domains. The flexibility and adaptability of neural networks make them a versatile tool in the field of machine learning.

What are some common challenges when using neural networks for image classification?

Some common challenges when using neural networks for image classification include overfitting, where the network becomes too specialized on the training data, and underfitting, where the network fails to capture the underlying patterns in the data. Other challenges include the need for large amounts of training data, computational requirements, and the interpretability of the network’s decisions.
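One rough way to spot overfitting and underfitting is to compare accuracy on the training set with accuracy on the validation set. The helper below is a heuristic sketch only: the function name and both thresholds are arbitrary assumptions, not established standards.

```python
def diagnose_fit(train_accuracy, val_accuracy, gap_threshold=0.10, low_threshold=0.70):
    """Rough heuristic; both thresholds are arbitrary assumptions."""
    if train_accuracy < low_threshold:
        # The model does poorly even on data it has already seen.
        return "underfitting"
    if train_accuracy - val_accuracy > gap_threshold:
        # Good on training data, much worse on unseen data.
        return "overfitting"
    return "reasonable fit"

print(diagnose_fit(0.99, 0.72))  # overfitting
print(diagnose_fit(0.61, 0.59))  # underfitting
print(diagnose_fit(0.93, 0.90))  # reasonable fit
```

Suitable thresholds depend on the task, so the numbers here should be treated as placeholders.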