Neural Net Equation

Artificial intelligence has become a major driver of technological progress, and neural networks are at the forefront of AI research and development. To understand what neural networks can do and where they fall short, you need to understand how they work and the mathematical equations behind them. In this article, we explain the neural net equation in simplified terms to help you grasp this fundamental concept in AI.

Key Takeaways:

- Neural networks are a central component of artificial intelligence.

- The neural net equation forms the basis of neural network computations.

- Understanding the neural net equation helps in AI applications and development.

What Is the Neural Net Equation?

The neural net equation is a mathematical description of how a neural network computes its output. *It defines how inputs are transformed, layer by layer, into output predictions. Training then adjusts the equation's parameters (the weights and biases) so that those predictions improve.* Neural networks consist of interconnected nodes called neurons. These are loosely inspired by biological neurons, and each one applies the neural net equation to the values it receives.

The Components of the Neural Net Equation

The neural net equation consists of multiple components, including:

- Input layer: Receives the initial inputs.

- Weights: Scale each input, setting how much it influences the neuron.

- Bias: A constant added to the weighted sum, shifting the point at which the neuron activates.

- Hidden layers: Intermediate layers between input and output layers.

- Activation function: Introduces non-linearity into the network.

- Output layer: Gives the final prediction or output.

*Each component plays a vital role in the neural net equation’s computations and contributes to the network’s ability to process and learn from complex data patterns.*
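The components above can be seen working together in a minimal forward pass through a tiny network. In this network, each neuron multiplies its inputs by weights, adds a bias, and applies an activation function. This is a plain-Python sketch under assumptions: the network has two inputs, one hidden layer of three ReLU neurons, and one sigmoid output neuron. The helper names (`dense`, `forward`) and all weight values are hypothetical and hand-picked, not trained.

```python
import math

def relu(z):
    # ReLU activation: max(0, z)
    return max(0.0, z)

def sigmoid(z):
    # Logistic sigmoid: squashes any real number into (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer (hypothetical helper).

    weights[j][i] is the weight from input i to neuron j; biases[j] is neuron j's bias.
    Neuron j outputs activation(sum_i weights[j][i] * inputs[i] + biases[j]).
    """
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x, params):
    # Input layer: x is passed in unchanged.
    # Hidden layer: 2 inputs -> 3 neurons with ReLU.
    hidden = dense(x, params["W1"], params["b1"], relu)
    # Output layer: 3 hidden values -> 1 neuron with sigmoid.
    return dense(hidden, params["W2"], params["b2"], sigmoid)

# Hypothetical, hand-picked parameters (not trained).
params = {
    "W1": [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]],
    "b1": [0.0, 0.1, 0.2],
    "W2": [[1.0, -1.0, 0.5]],
    "b2": [0.05],
}

print(forward([1.0, 2.0], params))  # one-element list, roughly [0.136]
```

Libraries such as NumPy or PyTorch usually store each layer's weights as a matrix and compute the whole layer as one matrix-vector product. The arithmetic is the same as in this loop-based version.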

The Mathematical Formula

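For a single neuron, the neural net equation is often expressed as:

Output = Activation Function(Sum(weight * input) + bias)

In symbols, y = f(w₁x₁ + w₂x₂ + … + wₙxₙ + b). Here the xᵢ are the inputs, the wᵢ are their weights, b is the bias, and f is the activation function. *The equation multiplies each input by its weight, adds the results together with the bias, and passes that sum through the activation function. A layer applies this computation for each of its neurons, and a network chains layers so that one layer's outputs become the next layer's inputs.*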
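As a worked example, take a single neuron with two inputs. The numbers are made up for illustration. The sigmoid activation is also an assumption, since any activation function could be plugged in. With inputs 2.0 and 3.0, weights 0.4 and -0.1, and bias 0.5, the weighted sum is 0.4 × 2.0 + (-0.1) × 3.0 = 0.5. Adding the bias gives 1.0, and sigmoid(1.0) ≈ 0.731. A minimal Python sketch of the same computation follows. The function name `neuron_output` is hypothetical.

```python
import math

def sigmoid(z):
    # Logistic sigmoid, used here as the activation function
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation):
    """Output = activation(sum(weight * input) + bias) for one neuron."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return activation(weighted_sum + bias)

# Hypothetical values from the worked example above
inputs = [2.0, 3.0]
weights = [0.4, -0.1]
bias = 0.5
print(round(neuron_output(inputs, weights, bias, sigmoid), 3))  # 0.731
```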

Summary Tables

The following tables summarize the components of the neural net equation and its formula:

| Component | Description |
|---|---|
| Input Layer | The initial layer that receives input data. |
| Weights | Values that assign importance to different inputs. |
| Bias | An additional value that adjusts the output of a neuron. |
| Hidden Layers | Intermediate layers between the input and output layers. |
| Activation Function | A mathematical function introducing non-linearity to the network. |
| Output Layer | The final layer providing the network’s prediction or output. |

| Component | Mathematical Formula |
|---|---|
| Output | Activation Function(Sum(weight * input) + bias) |

Applications of the Neural Net Equation

The neural net equation is the foundation for various AI applications, including:

- Image recognition

- Natural language processing

- Speech recognition

- Recommendation systems

*These applications rely on neural networks’ ability to learn patterns and relationships from large amounts of data and to make predictions based on what they have learned.*

Conclusion

Understanding the neural net equation is essential for grasping how neural networks work and how they are applied in AI. Knowing the mathematical representation and its components gives you a solid foundation for exploring AI development further.

Common Misconceptions


Neural net equations are often misunderstood due to their complexity. Let’s debunk some common misconceptions people have about them:

Misconception: Neural net equations are only useful for advanced programmers

- Neural net equations can be understood and used by programmers at different skill levels.

- Various libraries, tools, and frameworks are available that simplify the implementation of neural net equations.

- Many online tutorials and courses exist to help beginners learn and apply neural net equations effectively.

Misconception: Neural net equations always produce accurate results

- Like any other mathematical model, neural net equations can generate erroneous outputs without proper training and validation.

- The accuracy of neural net equations depends on the quality of the training data and the architectural design of the network.

- No single neural net equation is universally perfect for all applications; parameter fine-tuning and customization are often necessary for optimal results.

Misconception: Neural net equations possess human-like intelligence

- Although neural net equations can perform complex tasks, they lack true understanding and reasoning capabilities.

- Neural nets excel at pattern recognition and prediction, but they do not possess consciousness or subjective experience.

- Neural net equations are based on statistical models and probabilities rather than human-like cognition.

Misconception: Neural net equations can replace human judgment and expertise

- While neural net equations are powerful tools, they should be used as aids to human decision-making rather than complete replacements.

- Human judgment and expertise are crucial for interpreting the outputs of neural net equations and making contextually appropriate decisions.

- Applying neural net equations without considering the ethical, legal, and social implications can lead to unintended consequences.

Misconception: Neural net equations always require massive computational resources

- In recent years, advances in hardware and algorithms have made it possible to train neural networks with moderate computational resources.

- Pruning, model compression, and parallel processing can reduce the computational requirements of neural nets.

- Small networks with fewer layers and connections can run efficiently on devices with limited computing power, such as smartphones.
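Pruning comes in many forms. One simple form is magnitude pruning, which zeroes out the weights with the smallest absolute values on the assumption that they contribute least to the output. The sketch below applies it to a flat list of weights. The function name and the example weights are hypothetical. Real frameworks prune whole weight tensors and usually fine-tune the network afterward to recover accuracy.

```python
def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitudes.

    weights: flat list of floats; fraction: a value in [0, 1].
    Returns a new list and leaves the original unchanged.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    n_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]

# Hypothetical weights: prune the smallest half
w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(magnitude_prune(w, 0.5))  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

The zeroed weights only save memory and computation when they are stored in a sparse format or when entire neurons or channels are removed.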

Neural Network Architectures

Neural networks have revolutionized various fields, including image recognition, natural language processing, and autonomous driving. Different architectures are used in neural networks, each with its unique characteristics and applications. The following table presents a comparison of popular neural network architectures:

| Architecture | Applications | Advantages | Disadvantages |
|---|---|---|---|
| Convolutional Neural Network (CNN) | Image recognition, object detection | Robust to variations in input, highly parallelizable | Requires large amounts of training data |
| Recurrent Neural Network (RNN) | Natural language processing, speech recognition | Handles sequential data, captures temporal dependencies | Susceptible to vanishing/exploding gradients |
| Long Short-Term Memory (LSTM) | Language translation, sentiment analysis | Remembers long-term dependencies, mitigates vanishing gradients | Computationally more expensive than standard RNNs |
| Generative Adversarial Networks (GANs) | Image synthesis, realistic image generation | Creates realistic data samples, unsupervised learning | Training can be unstable, may suffer from mode collapse |
| Transformer | Machine translation, question answering | Self-attention captures long-range dependencies, parallel computation across sequence positions | Requires large computational resources; attention cost grows quadratically with sequence length |
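An RNN handles sequential data through a recurrence: it applies the same weights at every time step, and a hidden state carries information forward. Below is a minimal scalar sketch. It assumes a single hidden unit with a tanh activation, which is a common choice for simple RNNs. The function name and weight values are hypothetical.

```python
import math

def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Minimal scalar RNN: h_t = tanh(w_x * x_t + w_h * h_(t-1) + b).

    The same three parameters are reused at every time step, and the hidden
    state h carries information from earlier inputs to later ones.
    Returns the hidden state after each input.
    """
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Hypothetical parameters: a single 1.0 followed by zeros
print([round(h, 3) for h in rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)])
```

Feeding in a 1.0 followed by zeros shows the first input's influence shrinking at each step. Backpropagation through time multiplies repeatedly by `w_h` and by the slope of tanh. Over long sequences, that repeated multiplication is what can make gradients vanish or explode, as listed in the table.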

Activation Functions

Activation functions play a crucial role in neural networks by introducing non-linearity, enabling complex input-output mappings. The following table outlines different activation functions:

| Activation Function | Range | Advantages | Disadvantages |
|---|---|---|---|
| Step Function | {0,1} | Simple and interpretable | Gradient is zero everywhere except at the threshold (where it is undefined), so it cannot be trained with gradient descent; limited expressiveness |
| ReLU (Rectified Linear Unit) | [0,∞) | Faster convergence, avoids vanishing gradients for positive inputs | Dead neurons (stuck at 0 for all inputs), output not zero-centered |
| Sigmoid | (0,1) | Smooth gradient, outputs can be read as probabilities | Vanishing gradients, not zero-centered |
| Tanh (Hyperbolic Tangent) | (-1,1) | Bounded output, zero-centered | Vanishing gradients |
| Softmax | (0,1) per output, outputs sum to 1 | Converts a vector of scores into a probability distribution over classes | Each output depends on all inputs; naive implementations can overflow unless the maximum score is subtracted first |
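Each activation function in the table is only a few lines of code. The sketch below uses plain Python and the standard `math` module. The threshold convention for the step function at exactly 0 varies, so returning 1 at 0 is an assumption here. The sigmoid is split into two branches and the softmax subtracts the maximum score so that neither overflows on large inputs.

```python
import math

def step(z):
    # 1 at or above the threshold (0 here), otherwise 0
    return 1.0 if z >= 0 else 0.0

def relu(z):
    # max(0, z): passes positive values, zeroes out negative ones
    return max(0.0, z)

def sigmoid(z):
    # Two branches so math.exp never receives a large positive argument
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    # Zero-centered squashing function with range (-1, 1)
    return math.tanh(z)

def softmax(scores):
    # Subtracting the max score avoids overflow in math.exp and does not
    # change the result, because softmax is unchanged by adding a constant
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

for f in (step, relu, sigmoid, tanh):
    print(f.__name__, [round(f(z), 3) for z in (-2.0, 0.0, 2.0)])
print("softmax", [round(p, 3) for p in softmax([2.0, 1.0, 0.1])])
```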

Training Algorithms

Training algorithms determine how neural networks learn from the data. Here are some popular training algorithms:

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Backpropagation | Efficiently computes the gradient of the loss with respect to every weight | Only computes gradients; an optimizer such as SGD must apply them, and training may still converge to local minima or depend on initialization |
| Stochastic Gradient Descent (SGD) | Computationally efficient, low memory requirements | May get stuck in local minima, sensitive to learning rate |
| Adam | Adaptive learning rate, efficient for large datasets | May require tuning of hyperparameters; has been reported to generalize worse than well-tuned SGD on some tasks |
| Adagrad | Adapts learning rates for each parameter | Accumulates squared gradients, so the effective learning rate shrinks monotonically and training can stall |
| RMSProp | Adapts learning rates with exponential smoothing | Requires careful tuning of hyperparameters |
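Backpropagation and SGD work together: backpropagation applies the chain rule to get gradients, and SGD uses them to update the weights one example at a time. The sketch below trains a single sigmoid neuron with binary cross-entropy on the logical OR function. For this pairing, the derivative of the loss with respect to the pre-activation z simplifies to (prediction - label). The toy data, learning rate, epoch count, seed, and function name are all assumptions for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, lr=0.5, epochs=200, seed=0):
    """Train one sigmoid neuron with binary cross-entropy using SGD.

    data: list of (inputs, label) pairs with label 0 or 1.
    Backpropagation here is the chain rule: dLoss/dz = p - y, so
    dLoss/dw_i = (p - y) * x_i and dLoss/db = p - y.
    """
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in range(len(data[0][0]))]
    bias = 0.0
    for _ in range(epochs):
        samples = data[:]
        rng.shuffle(samples)  # "stochastic": visit examples in random order
        for x, y in samples:
            # Forward pass
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            # Backward pass: gradient of this example's loss w.r.t. z
            dz = p - y
            # SGD update: step against the gradient
            weights = [w - lr * dz * xi for w, xi in zip(weights, x)]
            bias -= lr * dz
    return weights, bias

# Toy data: logical OR, which a single neuron can learn
or_data = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]
w, b = train_neuron(or_data)
for x, y in or_data:
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    print(x, y, round(p, 3))
```

In a multi-layer network, backpropagation repeats this chain-rule step layer by layer from the output back to the input. Frameworks such as PyTorch automate it.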

Loss Functions

Loss functions quantify the discrepancy between predicted and actual values, guiding the learning process. Let’s explore some common loss functions:

| Loss Function | Range | Advantages | Disadvantages |
|---|---|---|---|
| Mean Squared Error (MSE) | [0,∞) | Commonly used, differentiable, penalizes large errors | Sensitive to outliers |
| Binary Cross-Entropy | (0,∞) | Applicable for binary classification | Undefined for predictions of exactly 0 or 1 (log 0), so predictions are usually clipped |
| Categorical Cross-Entropy | (0,∞) | Applicable for multi-class classification | Expects targets as class distributions (typically one-hot); heavily penalizes confident wrong predictions |
| Huber Loss | [0,∞) | Robust to outliers; quadratic for small errors and linear for large ones, combining L2 and L1 behavior | Requires tuning of the threshold hyperparameter δ |
| Kullback-Leibler Divergence | [0,∞) | Measures difference between probability distributions | Not symmetric, may increase computational complexity |
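The sketch below writes out the table's loss functions in plain Python. The clipping constant `eps` and the Huber threshold `delta = 1.0` are assumed defaults, not values from the original. The example values show how a single outlier inflates MSE much more than Huber loss, and how KL divergence changes when its arguments are swapped.

```python
import math

def mse(y_true, y_pred):
    # Mean of squared errors; large errors dominate because they are squared
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    # Labels are 0 or 1; predictions are clipped to [eps, 1 - eps] so log() is defined
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    # y_true is a one-hot vector, y_pred a probability vector (e.g. softmax output)
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

def huber(y_true, y_pred, delta=1.0):
    # Quadratic when |error| <= delta, linear beyond it, so outliers count less
    total = 0.0
    for t, p in zip(y_true, y_pred):
        e = abs(t - p)
        total += 0.5 * e * e if e <= delta else delta * (e - 0.5 * delta)
    return total / len(y_true)

def kl_divergence(p, q, eps=1e-12):
    # KL(p || q) for discrete distributions; terms with p_i = 0 contribute 0
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)

# One outlier (7.0 instead of 3.0): MSE grows much faster than Huber loss
y_true, y_pred = [1.0, 2.0, 3.0], [1.0, 2.0, 7.0]
print(mse(y_true, y_pred), huber(y_true, y_pred))
# Swapping the arguments changes KL divergence (it is not symmetric)
print(kl_divergence([0.9, 0.1], [0.5, 0.5]), kl_divergence([0.5, 0.5], [0.9, 0.1]))
```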

Regularization Techniques

To prevent overfitting and improve generalization, regularization techniques are employed. Let’s explore some widely used techniques:

| Technique | Advantages | Disadvantages |
|---|---|---|
| L1 Regularization (Lasso) | Performs feature selection, produces sparse models | More computationally expensive, solution not unique |
| L2 Regularization (Ridge) | Shrinks all weights smoothly, better conditioning, stable solutions | Rarely drives weights to exactly zero, so it is less effective for feature selection than L1 regularization |
| Dropout | Reduces overfitting, ensemble-like behavior | Increases training time, not compatible with all architectures |
| Data Augmentation | Increases effective dataset size, reduces overfitting | Requires domain-specific knowledge, additional computational resources |
| Early Stopping | Prevents overfitting, saves computational resources | Requires careful determination of stopping point |
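Two of these techniques are easy to sketch directly. L1 and L2 regularization add a penalty on the weights to the data loss. Early stopping watches validation loss and keeps the best epoch once the loss stops improving for a set number of epochs (often called "patience"). The function names, the `lam`, `patience`, and `min_delta` parameters, and the example numbers below are hypothetical illustrations.

```python
def l1_penalty(weights, lam):
    # L1 (lasso): lam * sum(|w|); tends to push some weights exactly to zero
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    # L2 (ridge): lam * sum(w^2); shrinks all weights but rarely to exactly zero
    return lam * sum(w * w for w in weights)

def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the epoch whose weights early stopping would keep.

    Stops once validation loss has not improved by more than min_delta
    for `patience` consecutive epochs, and returns the best epoch seen.
    """
    best_epoch, best_loss = 0, float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss = epoch, loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop training here
    return best_epoch

# Hypothetical weights, data loss, and validation-loss curve
weights = [0.5, -1.5, 0.0, 2.0]
data_loss = 0.40
print(data_loss + l2_penalty(weights, lam=0.01))  # total loss with L2 term
val_losses = [0.90, 0.70, 0.60, 0.58, 0.59, 0.61, 0.65, 0.70]
print(early_stopping_epoch(val_losses, patience=3))  # 3
```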

Optimization Techniques

Optimization techniques are used to efficiently find good weights and biases for a neural network. Because a network's loss surface is usually non-convex, these methods typically reach a good local solution rather than a guaranteed global optimum. Let’s explore some common optimization techniques:

| Technique | Advantages | Disadvantages |
|---|---|---|
| Gradient Descent | Baseline optimization method | Slow convergence, may get stuck in local minima |
| Momentum | Accelerates convergence, dampens oscillations, can carry past shallow minima | Hyperparameter tuning required |
| Adam | Combines adaptive learning rates and momentum | Requires selection of hyperparameters |
| RMSProp | Adapts learning rates with exponential smoothing, handles sparse gradients | Requires careful hyperparameter tuning |
| Adagrad | Adapts learning rates for each parameter independently | Accumulates squared gradients, so the effective learning rate shrinks monotonically |
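The update rules behind three of these optimizers can be compared on a toy problem. The sketch below minimizes f(w) = (w - 3)², whose minimum is at w = 3, starting from w = 0. The toy loss, learning rates, and step count are assumptions chosen for illustration. The momentum update uses one common formulation (v = βv + g, then w = w - lr·v). Adam uses the default hyperparameters β1 = 0.9, β2 = 0.999, and ε = 1e-8 proposed in the original Adam paper.

```python
import math

def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2
    return 2.0 * (w - 3.0)

def gradient_descent(w, lr=0.01, steps=300):
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def momentum(w, lr=0.01, beta=0.9, steps=300):
    v = 0.0
    for _ in range(steps):
        v = beta * v + grad(w)  # velocity: decaying sum of past gradients
        w -= lr * v
    return w

def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=300):
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # first moment (mean of gradients)
        v = beta2 * v + (1 - beta2) * g * g  # second moment (mean of squared gradients)
        m_hat = m / (1 - beta1 ** t)         # bias correction for zero initialization
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

for name, opt in [("GD", gradient_descent), ("Momentum", momentum), ("Adam", adam)]:
    print(name, round(opt(0.0), 4))
```

With these settings and the same small learning rate, plain gradient descent should still be slightly short of 3 after 300 steps, while momentum ends much closer. That gap illustrates the "accelerates convergence" entry in the table.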

Pretrained Models

Pretrained models are neural networks that have already been trained on large datasets and can be fine-tuned for specific tasks. They can save considerable time and computing resources and often improve performance, especially when task-specific data is limited. Here are some popular pretrained models:

| Model | Architecture | Applications | Performance |
|---|---|---|---|
| VGG16 | CNN | Image classification, object detection | Top-5 accuracy of 92.3% |
| BERT | Transformer | Natural language processing, question answering | State-of-the-art results on many NLP benchmarks at its 2018 release |
| YOLOv4 | CNN | Object detection, real-time video analysis | High accuracy and impressive speed |
| GPT-3 | Transformer | Language generation, chatbots | 175 billion parameters; generates fluent, human-like text |
| ResNet50 | CNN | Image classification, feature extraction | Highly accurate and widely used |

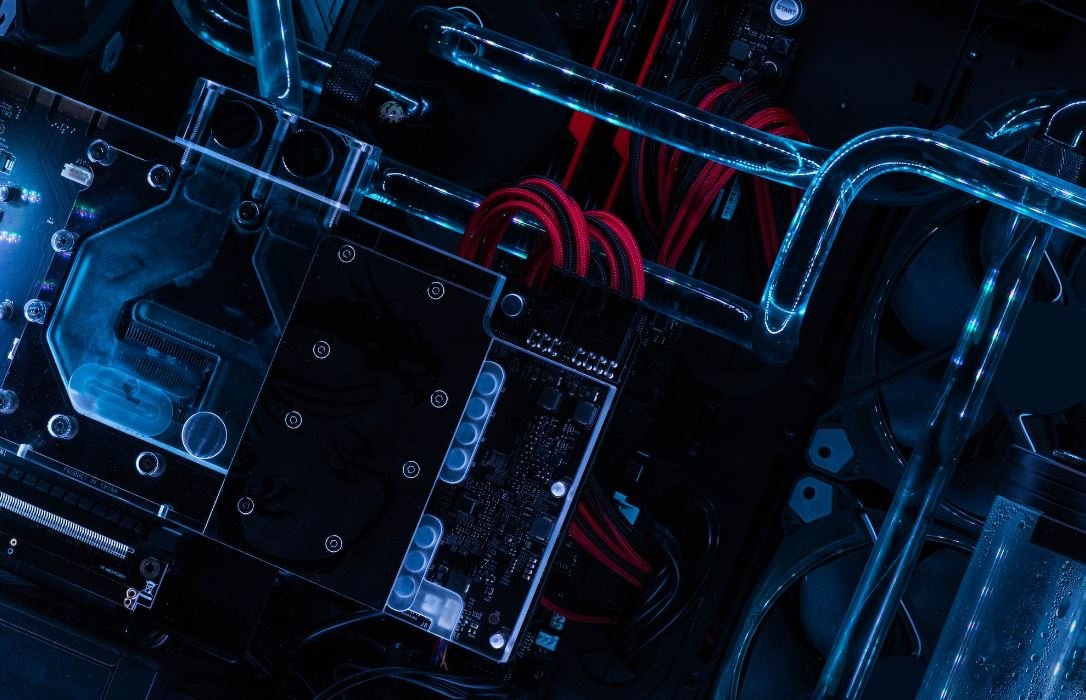

Hardware Acceleration

Accelerating neural network computations is crucial for real-time and resource-constrained applications. Hardware acceleration provides significant performance gains. Here are some popular hardware acceleration options:

| Technology | Advantages | Disadvantages |
|---|---|---|
| Graphics Processing Unit (GPU) | Parallel processing, high performance | Higher power consumption, expensive |
| Tensor Processing Unit (TPU) | Highly optimized for neural network computations | Less flexibility than GPUs |
| Field-Programmable Gate Array (FPGA) | Hardware customization, low power consumption | Higher development complexity |
| Application-Specific Integrated Circuit (ASIC) | Highly efficient for specific tasks, low power consumption | Expensive to design and manufacture |
| Neuromorphic Processors | Low power consumption, mimics biological neural networks | Less mature technology, limited availability |

Ethical Considerations

While neural networks offer remarkable capabilities, they also pose ethical concerns. It is crucial to consider the following aspects:

| Aspect | Considerations |
|---|---|
| Privacy | Protection of personal data, potential misuse |
| Biases | Addressing racial, gender, and other biases in training and deployment |
| Transparency | Understanding and interpreting the decisions made by neural networks |
| Accountability | Establishing responsibility for AI-driven outcomes |
| Job Displacement | Impact on employment and the need for reskilling and job creation |

Conclusion

Understanding neural networks, their components, and their applications is crucial in today’s data-driven world. This article delved into various aspects of neural networks, including different architectures, activation functions, training algorithms, loss functions, regularization techniques, optimization techniques, pretrained models, hardware acceleration options, and ethical considerations. By combining these elements effectively, researchers and practitioners can develop powerful AI systems that contribute to solving complex problems and advancing society.

Frequently Asked Questions


Questions

What is a neural net equation?

How does a neural net equation work?

What are the components of a neural net equation?

What are the popular activation functions used in neural net equations?

How do you calculate the output of a neural net equation?

What is backpropagation in neural net equations?

Can neural net equations handle both regression and classification problems?

What are the advantages of using neural net equations?

What are the limitations of neural net equations?

How can neural net equations be implemented in code?

Answers

What is a neural net equation?