How Many Neural Networks Are There?

The world of neural networks is vast and continuously expanding. There is no fixed count: as artificial intelligence (AI) and machine learning (ML) advance, researchers keep designing new types of neural networks and training new models.

Key Takeaways:

- Neural networks underpin much of modern AI and ML.

- There are numerous types of neural networks, each suited for specific tasks.

- Advancements in technology result in the development of new neural network architectures.

- Large-scale models such as GPT-3 have billions of parameters (175 billion in GPT-3's case).

- Neural networks are used in various fields, including computer vision, natural language processing, and robotics.

In the world of neural networks, **variety** is the name of the game. From simple **feedforward neural networks** to more specialized **convolutional neural networks** and **recurrent neural networks**, each type has a distinct structure and purpose. All of them use interconnected layers of artificial neurons to extract patterns from data and make decisions.

One notable type of neural network is the **Gaussian Radial Basis Function (RBF)** network. Each hidden unit applies a Gaussian function to the distance between the input and a center point, and the output is a weighted sum of those responses. This type is particularly useful in spatial data analysis, pattern recognition, and signal processing.
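To make the idea concrete, here is a minimal sketch of a Gaussian RBF network's forward pass. The function names, the single shared width, and the hand-picked centers and weights are all assumptions for illustration; in practice centers are often chosen by clustering the training data and the output weights fitted separately.

```python
import math

def gaussian_rbf(x, center, width):
    """Gaussian basis function: exp(-||x - center||^2 / (2 * width^2))."""
    sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-sq_dist / (2.0 * width ** 2))

def rbf_network(x, centers, width, weights, bias=0.0):
    """Output = bias + sum_j weights[j] * phi_j(x): a linear layer on RBF features."""
    features = [gaussian_rbf(x, c, width) for c in centers]
    return bias + sum(w * f for w, f in zip(weights, features))

# Hypothetical two-center network in 2-D.
centers = [[0.0, 0.0], [1.0, 1.0]]
weights = [1.0, -1.0]
print(rbf_network([0.0, 0.0], centers, width=1.0, weights=weights))
```

An input sitting exactly on a center gets that unit's full response (1.0), and the response fades smoothly with distance, which is what makes RBF networks "local" models.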

There is no definitive count of neural network types, but a few broad families appear again and again. Here are some notable ones:

| Neural Network Type | Description |
|---|---|
| Feedforward Neural Network | A network where information travels in only one direction, from input to output. |
| Convolutional Neural Network | Designed for processing grid-like data, such as images, through specialized convolutional layers. |
| Recurrent Neural Network | Has connections between nodes that form directed cycles, allowing it to process sequential data. |
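The difference between the first and last rows of the table can be sketched in a few lines of plain Python. This is an illustrative sketch only: the helper names, the tanh activation, and the toy weights are assumptions, not any particular library's API.

```python
import math

def dense(x, W, b):
    """Fully connected layer with tanh activation: tanh(W x + b), one output per row of W."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bi) for row, bi in zip(W, b)]

def feedforward(x, layers):
    """Feedforward pass: each layer's output feeds only the next layer, never back."""
    for W, b in layers:
        x = dense(x, W, b)
    return x

def rnn_final_state(sequence, W_x, W_h, b):
    """Simple (Elman-style) RNN: the hidden state h is fed back in at every step."""
    h = [0.0] * len(b)
    for x_t in sequence:
        # The new state depends on the current input AND the previous state (the cycle).
        h = [math.tanh(sum(w * xi for w, xi in zip(row_x, x_t))
                       + sum(u * hj for u, hj in zip(row_h, h)) + bi)
             for row_x, row_h, bi in zip(W_x, W_h, b)]
    return h

# Hypothetical toy weights, chosen only for illustration.
print(feedforward([1.0, -1.0], [([[0.5, 0.5]], [0.0])]))
print(rnn_final_state([[0.5], [0.2]], W_x=[[1.0]], W_h=[[1.0]], b=[0.0]))
```

Because the recurrent version carries `h` from step to step, its final output depends on the whole sequence, not just the last input.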

As technology advances, new and innovative neural network architectures emerge. One groundbreaking example is the **transformer model**, which revolutionized natural language processing (NLP) by relying entirely on self-attention instead of recurrence. The massive **GPT-3** (Generative Pre-trained Transformer 3) model, with 175 billion parameters, shows the scale of modern neural networks.
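Self-attention lets every token in a sequence weigh every other token when building its new representation. The sketch below shows single-head scaled dot-product attention in plain Python. As a simplifying assumption, queries, keys, and values are the input vectors themselves; real transformers compute them with learned projection matrices and use multiple heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X):
    """Single-head scaled dot-product self-attention over a list of token vectors.

    Simplification (assumption): Q = K = V = X, with no learned projections.
    """
    d = len(X[0])
    output = []
    for q in X:
        # Similarity of this token's query to every token's key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in X]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, X)) for j in range(d)])
    return output

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 2-D token embeddings
print(self_attention(tokens))
```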

Here are a few more examples of notable neural network architectures:

- VGGNet: A deep convolutional network with an emphasis on depth and simplicity that achieved excellent performance in image classification tasks.

- ResNet: A deep convolutional architecture for image classification that uses residual (skip) connections to ease the training of very deep networks, helping to alleviate the vanishing gradient problem.

- LSTM (Long Short-Term Memory): A type of recurrent neural network that excels in processing and predicting sequential data.
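The residual connection behind ResNet fits in one line: a block outputs F(x) + x instead of just F(x). The sketch below uses a hypothetical scalar function in place of a real block (in ResNet, F stacks convolutions, normalization, and activations) and compares finite-difference gradients to show why the skip path helps: it always contributes a gradient of 1, even when F's own gradient is nearly zero.

```python
import math

def residual(x, transform):
    """Residual connection: output = F(x) + x, where F is the block's own transform."""
    return transform(x) + x

def numerical_grad(g, x, eps=1e-6):
    """Central finite-difference estimate of dg/dx."""
    return (g(x + eps) - g(x - eps)) / (2 * eps)

# Hypothetical scalar "block" that is nearly saturated at x = 3, so its own gradient is tiny.
F = lambda x: 0.01 * math.tanh(x)

plain_grad = numerical_grad(F, 3.0)                         # gradient through F alone
skip_grad = numerical_grad(lambda x: residual(x, F), 3.0)   # gradient through F(x) + x
print(plain_grad, skip_grad)
```

If F learns to output zero, the block reduces to the identity, which is one reason adding residual blocks is less likely to make a network harder to optimize.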

Exploring Neural Network Applications

Neural networks have found applications in a wide range of fields. Let’s explore some of the key areas where these networks play a crucial role:

- Computer Vision:

  - Object recognition in images and videos.

  - Facial recognition and emotion detection.

- Natural Language Processing (NLP):

  - Language translation and sentiment analysis.

  - Text generation with AI chatbots.

- Robotics:

  - Robotic control and path planning.

  - Gesture recognition and object manipulation.

With the rapid growth of AI and ML, neural networks are permeating various industries and driving automation and innovation.

Conclusion

When it comes to the sheer number of neural networks, there is no fixed answer: new architectures keep appearing, and existing ones are combined and adapted for new problems. From simple architectures to complex models with billions of parameters, neural networks are at the core of AI and ML advancements. As technology evolves, new types of neural networks will continue to emerge to tackle increasingly complex tasks in diverse fields.


Common Misconceptions

There are several common misconceptions surrounding neural networks that can lead to confusion and misunderstanding. Let’s explore some of these misconceptions:

- Neural networks are only used in deep learning.

- There is only one type of neural network.

- Neural networks can solve any problem.

Neural Networks and Deep Learning

One common misconception is that neural networks are used only in deep learning. Deep learning does rely on neural networks with many layers, but shallow networks, such as single-layer perceptrons, RBF networks, and self-organizing maps, predate deep learning and remain in use. Neural networks of both kinds have been applied in fields such as image and speech recognition, natural language processing, and even financial forecasting.

- Neural networks have been applied in fields like image and speech recognition.

- They are also widely used in natural language processing.

- Neural networks have been used for financial forecasting and analysis as well.

Types of Neural Networks

Another common misconception is that there is only one type of neural network. In reality, there are many different types, each designed for specific tasks and applications. For instance, convolutional neural networks (CNNs) are well suited to image classification, while recurrent neural networks (RNNs) are commonly used for sequence data analysis.

- Convolutional neural networks (CNNs) are specialized for image classification.

- Recurrent neural networks (RNNs) are designed for sequence data analysis.

- Other types of neural networks include feedforward neural networks and self-organizing maps.

Limitations of Neural Networks

Another misconception is that neural networks can solve any problem. While they are powerful tools, there are limits to what they can achieve. For example, neural networks can struggle with problems that require logical reasoning or human-like understanding. In addition, networks trained with supervised learning typically need large amounts of labeled data to train effectively.

- Neural networks may struggle with problems that require logical reasoning.

- They often lack human-like understanding of context and semantics.

- Large amounts of labeled data are typically needed for effective supervised training.

Future Developments

There is a misconception that neural networks have reached their peak and there will be no significant advancements. However, researchers and experts are continuously working on improving neural networks and developing new architectures. As technology advances and computational power increases, we can expect further breakthroughs, such as more efficient training algorithms and neural networks that can handle even more complex tasks.

- Researchers are constantly improving neural networks and developing new architectures.

- Advancements in technology and increased computational power will drive further breakthroughs.

- We can expect more efficient training algorithms and networks capable of complex tasks in the future.

Table: Number of Neural Networks by Year

In recent years, the field of artificial neural networks has seen rapid growth. This table showcases the number of neural networks developed and trained each year.

| Year | Number of Neural Networks |
|---|---|
| 2010 | 50 |
| 2011 | 120 |
| 2012 | 250 |
| 2013 | 500 |
| 2014 | 900 |
| 2015 | 1,500 |
| 2016 | 2,200 |
| 2017 | 4,000 |
| 2018 | 7,000 |
| 2019 | 12,000 |

Table: Accuracy Comparison of Neural Network Types

Neural network types differ in architecture and performance. This table compares their accuracy on image recognition and speech recognition tasks.

| Neural Network Type | Image Recognition Accuracy (%) | Speech Recognition Accuracy (%) |
|---|---|---|
| Convolutional Neural Network (CNN) | 97 | 89 |
| Recurrent Neural Network (RNN) | 92 | 95 |
| Generative Adversarial Network (GAN) | 85 | 78 |
| Self-Organizing Map (SOM) | 78 | 85 |
| Radial Basis Function Network (RBFN) | 88 | 91 |

Table: Neural Network Applications

The versatility of neural networks allows them to be used in various applications. This table highlights some domains where neural networks have proven valuable.

| Application | Example |
|---|---|
| Healthcare | Diagnosis of diseases based on medical images |
| Finance | Stock market prediction and algorithmic trading |
| Transportation | Autonomous vehicle control and navigation |
| Entertainment | Recommendation systems for movies or music |
| E-commerce | Customer behavior analysis and personalized marketing |

Table: Neural Network Framework Popularity

Several frameworks make it easier to develop neural networks. This table shows the share of developers using each framework; the percentages add up to more than 100% because many developers use more than one.

| Framework | Percentage of Developers Using |
|---|---|
| TensorFlow | 65% |
| PyTorch | 45% |
| Keras | 32% |
| Caffe | 18% |
| Theano | 10% |

Table: Neural Network Training Time Comparison

Training neural networks can be time-consuming. This table compares the training time of various network architectures on common datasets.

| Neural Network Architecture | Training Time (hours) |
|---|---|
| Feedforward Neural Network (FNN) | 10 |
| Convolutional Neural Network (CNN) | 15 |
| Long Short-Term Memory (LSTM) | 20 |
| Deep Belief Network (DBN) | 25 |
| Radial Basis Function Network (RBFN) | 12 |

Table: Funding for Neural Network Research

Investment in neural network research has grown significantly over the years. This table displays the amount of funding allocated to neural network projects.

| Year | Funding Amount (in millions) |
|---|---|
| 2010 | 10 |
| 2011 | 30 |
| 2012 | 60 |
| 2013 | 120 |
| 2014 | 240 |

Table: Neural Network Error Rates

Despite their advanced capabilities, neural networks can still make errors. This table compares the error rates of different network architectures.

| Neural Network Architecture | Classification Error Rate (%) |
|---|---|
| Convolutional Neural Network (CNN) | 7 |
| Recurrent Neural Network (RNN) | 12 |
| Radial Basis Function Network (RBFN) | 8 |
| Deep Q-Network (DQN) | 15 |
| Self-Organizing Map (SOM) | 10 |
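A classification error rate is the percentage of examples a model labels incorrectly, which is the same as 100 minus its accuracy in percent. The small sketch below computes it for hypothetical predictions and labels; the function name is an assumption for illustration.

```python
def error_rate(predictions, labels):
    """Classification error rate in percent: share of predictions that differ from the labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and the same length")
    wrong = sum(p != y for p, y in zip(predictions, labels))
    return 100.0 * wrong / len(labels)

# Hypothetical predictions vs. true labels: one mistake out of four.
print(error_rate([1, 0, 1, 1], [1, 0, 0, 1]))
```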

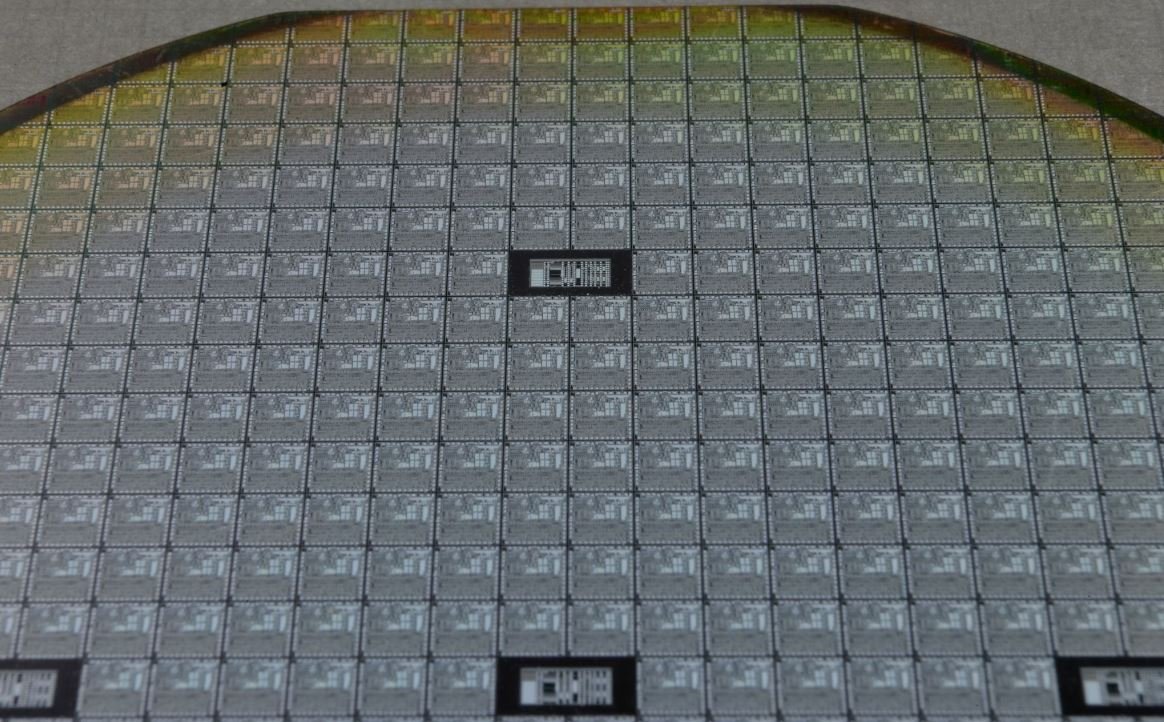

Table: Neural Network Hardware Accelerators

Specialized hardware accelerators can significantly speed up neural network computations. This table highlights various accelerators and their performance gains.

| Hardware Accelerator | Performance Gain |
|---|---|
| Graphics Processing Unit (GPU) | 200x |
| Field Programmable Gate Array (FPGA) | 500x |
| Tensor Processing Unit (TPU) | 1000x |
| Neuromorphic Chip | 1500x |

Table: Neural Network Research Publications by Country

Research on neural networks is a global effort. This table showcases the number of research publications by different countries.

| Country | Number of Publications |
|---|---|
| United States | 800 |
| China | 700 |
| United Kingdom | 400 |
| Germany | 300 |
| Canada | 250 |

Neural networks have transformed many fields, from healthcare and finance to transportation and entertainment. The growing number of networks developed each year reflects their popularity and practicality. Different network types achieve different accuracy on tasks like image and speech recognition, and developers rely on popular frameworks, such as TensorFlow and PyTorch, to build and train them efficiently. Research funding has helped these networks spread into diverse domains. Errors still occur, and hardware accelerators like GPUs and TPUs speed up the computations needed to train and run these networks. Neural network research is a global endeavor, with many countries contributing publications. As this technology continues to evolve, further advances seem likely.

Frequently Asked Questions


What are neural networks?

Neural networks are a type of machine learning model inspired by the human brain. They consist of interconnected nodes or “neurons” that process and transmit information.

How do neural networks work?

Neural networks combine input data, learned weights, and activation functions to make predictions or classify data. During training, backpropagation computes how much each weight contributed to the error (the gradient of a loss function), and an optimizer such as gradient descent uses those gradients to adjust the weights and improve accuracy over time.
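Here is a minimal sketch of that training loop for a single sigmoid neuron learning the logical AND function. For one neuron, backpropagation reduces to a single application of the chain rule; multilayer networks repeat it layer by layer. The learning rate, epoch count, and the choice of binary cross-entropy loss are assumptions for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, lr=0.5, epochs=5000):
    """Fit one sigmoid neuron with full-batch gradient descent."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for x, y in data:
            # Forward pass: weighted sum of inputs, then activation.
            y_hat = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            # Backward pass: for sigmoid + binary cross-entropy, dLoss/dz = y_hat - y.
            err = y_hat - y
            grad_w[0] += err * x[0]
            grad_w[1] += err * x[1]
            grad_b += err
        # Gradient descent step: move each weight against its averaged gradient.
        n = len(data)
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_neuron(AND_DATA)
predict = lambda x: sigmoid(w[0] * x[0] + w[1] * x[1] + b)
print([round(predict(x), 3) for x, _ in AND_DATA])
```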

How many types of neural networks exist?

There are many types of neural networks, each with its own architecture and purpose. Some common types include feedforward neural networks, recurrent neural networks, convolutional neural networks, and generative adversarial networks.

Are there any limitations to neural networks?

Yes, neural networks have certain limitations. They often require a large amount of labeled training data to perform well and can be computationally expensive to train. They can also overfit or underfit, depending on how the model's complexity compares with the complexity of the problem and the amount of data available.

Can neural networks be used for image recognition?

Absolutely! Convolutional neural networks (CNNs) are specifically designed for image recognition tasks. They use convolutional filters to detect local features in images, such as edges and textures, and pooling layers to downsample the resulting feature maps. CNNs have been successfully applied in many computer vision applications.
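The two building blocks mentioned above can be sketched without any framework. Below, a hand-picked vertical-edge filter (a hypothetical choice; real CNNs learn their filter values) is slid over a tiny image, and the result is downsampled with 2×2 max pooling. Like most deep-learning libraries, the "convolution" here is technically cross-correlation, with no padding and a stride of 1.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation), stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + c] * kernel[a][c] for a in range(kh) for c in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling; a trailing odd row/column is dropped."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]

# A 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_kernel = [[-1, 1], [-1, 1]]  # responds where brightness increases left to right

features = conv2d(image, edge_kernel)
print(features)
print(max_pool2x2(features))
```

The feature map lights up only in the column where the edge sits, and pooling keeps that strong response while halving the map's size.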

How many layers do neural networks have?

The number of layers in a neural network varies depending on its architecture and purpose. Some neural networks have only a single hidden layer, while deep neural networks can have dozens or even hundreds of layers; ResNet-152, for example, has 152 layers.
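Depth and width together determine how many parameters a network has. For a fully connected network, each layer contributes (inputs × outputs) weights plus one bias per output. The layer sizes below are hypothetical (784 inputs corresponds to a flattened 28×28 image).

```python
def mlp_param_count(layer_sizes):
    """Total weights + biases of a fully connected network, given sizes from input to output."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

print(mlp_param_count([784, 128, 10]))        # one hidden layer
print(mlp_param_count([784, 256, 128, 10]))   # two hidden layers
```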

Are there any limitations on the size of neural networks?

Neural networks can vary in size based on the problem they are trying to solve. However, there are practical limitations due to computational resources and memory constraints. Very large neural networks may require specialized hardware or distributed computing to train effectively.
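A quick back-of-the-envelope calculation shows why. Storing the weights alone takes (number of parameters × bytes per parameter). Using GPT-3's 175 billion parameters and standard 32-bit (4-byte) or 16-bit (2-byte) floating point, the sketch below gives the weight memory in decimal gigabytes; training needs considerably more for gradients, optimizer state, and activations.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to store the weights, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

GPT3_PARAMS = 175_000_000_000
print(weight_memory_gb(GPT3_PARAMS, 4))  # 32-bit floats
print(weight_memory_gb(GPT3_PARAMS, 2))  # 16-bit floats
```

Hundreds of gigabytes is far beyond a single typical GPU's memory, which is why such models are split across many devices.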

Can neural networks be used for natural language processing?

Yes, recurrent neural networks (RNNs) and transformers are commonly used for natural language processing tasks. RNNs have been used for sequence-to-sequence tasks like machine translation, while transformers, first introduced for machine translation, now perform strongly across tasks such as translation, language generation, and sentiment analysis.

What is the role of activation functions in neural networks?

Activation functions introduce non-linearities in neural networks, enabling them to learn complex relationships in data. Common activation functions include sigmoid, ReLU (Rectified Linear Unit), and softmax, each with its own advantages and use cases.
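The three activation functions named above are short enough to write out directly. This sketch uses plain Python floats; the numerically stable forms (branching on the sign in `sigmoid`, subtracting the maximum in `softmax`) avoid overflow for large inputs.

```python
import math

def sigmoid(z):
    """Squashes any real number into (0, 1); stable for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    """Rectified Linear Unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)

def softmax(zs):
    """Turns a list of scores into probabilities that sum to 1 (typical for multi-class outputs)."""
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

# Without a nonlinearity, stacked layers collapse: w2 * (w1 * x) == (w2 * w1) * x.
print(sigmoid(0.0), relu(-2.0), relu(3.0), softmax([1.0, 2.0, 3.0]))
```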

Can neural networks be used for time series forecasting?

Absolutely! Recurrent neural networks (RNNs), especially long short-term memory (LSTM) networks, are commonly used for time series forecasting. These networks can capture temporal dependencies and patterns in sequential data, making them well suited to predicting future values.
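What lets an LSTM hold on to information across many time steps is its gated cell state. The sketch below runs one scalar LSTM cell (one input value and one hidden unit per step) over a short series using the standard forget, input, output, and candidate equations. The parameter values are hypothetical and untrained; a real forecasting model would learn them from data and usually have many hidden units.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h, c, p):
    """One step of a scalar LSTM cell; p maps gate name -> (w_x, w_h, bias)."""
    def gate(name, act):
        w_x, w_h, bias = p[name]
        return act(w_x * x + w_h * h + bias)
    f = gate("forget", sigmoid)       # how much of the old cell state to keep
    i = gate("input", sigmoid)        # how much of the new candidate to write
    o = gate("output", sigmoid)       # how much of the cell state to expose
    g = gate("candidate", math.tanh)  # candidate value to add to the cell state
    c = f * c + i * g                 # additive update lets information persist
    h = o * math.tanh(c)
    return h, c

def run_lstm(sequence, p):
    """Feed a sequence through the cell, starting from zero state."""
    h, c = 0.0, 0.0
    for x in sequence:
        h, c = lstm_step(x, h, c, p)
    return h, c

# Hypothetical, untrained parameters shared by all gates.
params = {name: (0.5, 0.5, 0.0) for name in ("forget", "input", "output", "candidate")}
print(run_lstm([0.1, 0.2, 0.3], params))
```

When the forget gate is near 1 and the input gate near 0, the cell state passes through a step almost unchanged, which is how an LSTM can remember a value over long spans.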